Server Setting by en

Home Tech Setup

Objective: The goal is to document the technical environment and setup used at home, providing inspiration and guidance for readers who may want to create a similar setup.

Expectations: I am a Data Scientist, so some aspects of the setup may be incomplete or not fully optimized. Feedback is always welcome, and I plan to keep updating the content based on suggestions and improvements.

Overview

1. Introduction

- Background for writing this blog post
  - While setting up various technical environments at home, I realized how hard it can be to find related resources when nothing is properly documented.
  - I want to share reference materials and setup tips to help readers build or improve similar environments.
- Features of the technical setup
  - The setup spans multiple operating systems (macOS, Linux, and Windows), and I experiment with various applications on each.
- Expectations from readers
  - I would greatly appreciate feedback and suggestions for improving the setup.

2. Hardware Configuration

1) Introduction to Equipment

| Equipment | Model | CPU | GPU | Memory | Storage | OS |
|---|---|---|---|---|---|---|
| Mac mini | Mac mini (M1, 2020) | 4-core Apple Firestorm 3.2 GHz + 4-core Apple Icestorm 2.06 GHz | 8-core Apple G13G 1.28 GHz (+ NPU: 16-core 4th-gen Neural Engine) | 16 GB | SSD: 256 GB + NVMe SSD: 500 GB + HDD: 1 TB | macOS Sequoia 15.1.1 (24B91) |
| Raspberry Pi 3 | Raspberry Pi 3 Model B Rev 1.2 | 1.2 GHz ARM Cortex-A53 | Broadcom VideoCore IV MP2 400 MHz | 1 GB LPDDR2-450 SDRAM | SD: 64 GB | Debian GNU/Linux 12 (bookworm), kernel 6.6.31+rpt-rpi-v8 |
| Raspberry Pi 4 | Raspberry Pi 4 Model B Rev 1.1 | 1.8 GHz ARM Cortex-A72 MP4 (BCM2711 SoC) | Broadcom VideoCore VI MP2 500 MHz (BCM2711 SoC) | 4 GB LPDDR4-3200 SDRAM | SD: 64 GB | Ubuntu 20.04.3 LTS |
| Odroid | ODROID-HC4 | Quad-core Cortex-A55 | ARM Mali-G31 MP2 | 16 GB | SD: 32 GB + HDD: 6 TB | Ubuntu 20.04.6 LTS, kernel 4.9.337-92 |
| Windows notebook | HP Pavilion 14-al150tx | Intel Core i7-7500U (2.7 GHz, 2 cores) | NVIDIA GeForce 940MX | 4 GiB DDR4 | SSD: 256 GB + HDD: 1 TB | Windows 10 |

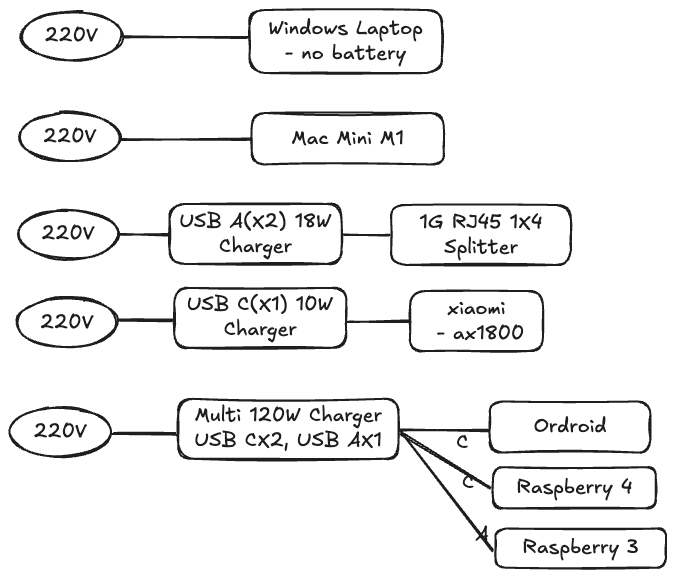

2) Hardware Layout and Connections

- Router: Xiaomi AX1800

- Wired Connections: Windows, Raspberry Pi 4, Raspberry Pi 3, Odroid

- Wireless Connections: Mac Mini

3) Power connection

3. Software

1) Applications

- Installed on all PCs:
  - Docker, cAdvisor, Portainer Agent (except on macOS, which runs the Portainer host itself), rclone
- Installed on specific PCs:
  - macOS: Airflow, Portainer
  - Raspberry Pi 4: Cronicle, Grafana, Ghost, Redis (+ exporter)
  - Raspberry Pi 3: Vault
  - Odroid: Prometheus, PostgreSQL (+ exporter), MariaDB (+ exporter), MongoDB (+ exporter)
  - Windows 10:
    - WSL
    - WCS: windows_exporter (run manually, not registered as a service)
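cAdvisor runs on every machine, and the Odroid hosts Prometheus. One way to wire the exporters above into Prometheus is a `scrape_configs` section with one job per exporter type. The sketch below generates that section as YAML text. It rests on assumptions: the hostnames are hypothetical placeholders for the real 192.168.0.XXX addresses, and the ports are each exporter's usual default, which this setup may not use.

```python
"""Sketch: generate a Prometheus `scrape_configs` section for the home servers."""

# Hypothetical hostnames; replace them with the real 192.168.0.XXX addresses.
HOSTS = {
    "macmini": ["cadvisor"],
    "rpi4": ["cadvisor", "redis"],
    "rpi3": ["cadvisor"],
    "odroid": ["cadvisor", "postgres", "mysql", "mongodb"],
    "windows": ["cadvisor", "windows"],
}

# Usual default ports of each exporter (assumption: not changed in this setup).
DEFAULT_PORTS = {
    "cadvisor": 8080,
    "redis": 9121,     # redis_exporter
    "postgres": 9187,  # postgres_exporter
    "mysql": 9104,     # mysqld_exporter, which also works with MariaDB
    "mongodb": 9216,   # Percona mongodb_exporter
    "windows": 9182,   # windows_exporter
}


def build_targets(hosts, ports=DEFAULT_PORTS):
    """Group "host:port" targets by exporter type (one Prometheus job each)."""
    jobs = {}
    for host, exporters in hosts.items():
        for exporter in exporters:
            jobs.setdefault(exporter, []).append(f"{host}:{ports[exporter]}")
    return jobs


def render_scrape_configs(jobs):
    """Render the jobs as the `scrape_configs` part of prometheus.yml."""
    lines = ["scrape_configs:"]
    for job in sorted(jobs):
        targets = ", ".join(f'"{t}"' for t in jobs[job])
        lines.append(f'  - job_name: "{job}"')
        lines.append("    static_configs:")
        lines.append(f"      - targets: [{targets}]")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_scrape_configs(build_targets(HOSTS)))
```

With one job per exporter type, the `job` label stays meaningful when building Grafana dashboards. Check the real port mappings before using this. For example, the default Airflow Docker Compose file also publishes port 8080 on the Mac, so cAdvisor there probably listens on a different port.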

2) Development Environment

- VSCode:
  - Local VSCode + GitHub
  - Installed the `code` CLI to enable remote access via vscode.dev
- Project management:
  - Managed using Obsidian

4. Network & Monitoring

1) Basic Network Structure

- Devices connected to the Xiaomi router (IP range 192.168.0.XXX) → server network
- Devices connected to the IPTime router (IP range 192.168.1.XXX) → network for devices such as TVs

2) Monitoring and Management

- Monitoring with Prometheus + Grafana
  - Uses cAdvisor and various exporters
  - Currently focused on monitoring (data collection); no alerts are set up yet
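No alerts are configured yet. A possible first step is a small script that asks Prometheus which scrape targets are down and formats a message for a Slack incoming webhook. Prometheus's built-in `up` metric is 0 for a target whose last scrape failed. This is a sketch under assumptions: the Prometheus address and the webhook URL are placeholders. Prometheus alerting rules routed through Alertmanager's Slack integration would be the more standard long-term route.

```python
"""Sketch: list Prometheus targets that are down and build a Slack message."""
import json
import urllib.parse
import urllib.request


def query_up(prometheus_url):
    """Run the instant query `up` through the Prometheus HTTP API."""
    params = urllib.parse.urlencode({"query": "up"})
    url = f"{prometheus_url}/api/v1/query?{params}"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def down_targets(response):
    """Return (job, instance) pairs whose `up` sample is 0."""
    if response.get("status") != "success":
        raise ValueError(f"Prometheus query failed: {response.get('error')}")
    down = []
    for sample in response["data"]["result"]:
        _timestamp, value = sample["value"]  # value arrives as a string, e.g. "0"
        if float(value) == 0:
            labels = sample["metric"]
            down.append((labels.get("job", "?"), labels.get("instance", "?")))
    return down


def slack_payload(down):
    """Body for a Slack incoming webhook, or None when everything is up."""
    if not down:
        return None
    lines = [f"- {job} @ {instance}" for job, instance in down]
    return {"text": "Exporters down:\n" + "\n".join(lines)}


def post_to_slack(webhook_url, payload):
    """POST the JSON payload to the webhook and return the HTTP status."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


if __name__ == "__main__":
    # Offline example shaped like a real /api/v1/query response.
    sample = {
        "status": "success",
        "data": {
            "resultType": "vector",
            "result": [
                {"metric": {"job": "redis", "instance": "rpi4:9121"}, "value": [1700000000, "0"]},
                {"metric": {"job": "cadvisor", "instance": "rpi4:8080"}, "value": [1700000000, "1"]},
            ],
        },
    }
    print(slack_payload(down_targets(sample)))
```

A Cronicle event could run `down_targets(query_up("http://odroid:9090"))` every few minutes and call `post_to_slack` whenever the payload is not `None`. The host name is hypothetical; 9090 is Prometheus's default port.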

5. Data Management and Backup

- Data storage and backup approach
  - Currently, no dedicated backup system is in place.
  - Data is stored on the HDD (or SSD) of each server.
  - The Odroid has a 6 TB HDD, which is currently used as storage.

6. Use Cases

- Current server use cases
  - Recording EBS with Airflow
    - Airflow triggers daily → records EBS (on Raspberry Pi 4) → uploads to Dropbox → listened to on a mobile device
  - Ghost blog installed on Raspberry Pi 4
    - The blog is set up, but no posts have been published yet
  - Backing up `docker-compose.yml` files to Google Drive using rclone
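The compose-file backup could be scripted roughly as follows. This is a minimal sketch, not the exact script used here, and it makes three assumptions:
- Each app lives in its own folder under a common directory.
- A Google Drive remote has already been created with `rclone config`.
- The remote name `gdrive` and all paths are hypothetical.

The script only builds and runs one `rclone copy` command with `--include` filters.

```python
"""Sketch: upload every docker-compose file under one folder with rclone."""
import shutil
import subprocess

# Assumed file names; add others (e.g. "compose.yaml") if some apps use them.
COMPOSE_NAMES = ("docker-compose.yml", "docker-compose.yaml")


def build_backup_command(src_dir, remote, dest_path, dry_run=False):
    """Build an `rclone copy` that transfers only the compose files.

    A filter pattern without a leading "/" matches the file name at any depth,
    so each app's compose file is copied and the folder layout is preserved.
    """
    cmd = ["rclone", "copy", src_dir, f"{remote}:{dest_path}"]
    for name in COMPOSE_NAMES:
        cmd += ["--include", name]
    if dry_run:
        cmd.append("--dry-run")  # report what would be copied, upload nothing
    return cmd


def backup(src_dir, remote, dest_path, dry_run=False):
    """Run the command; raises CalledProcessError if rclone fails."""
    if shutil.which("rclone") is None:
        raise RuntimeError("rclone is not installed or not on PATH")
    subprocess.run(build_backup_command(src_dir, remote, dest_path, dry_run), check=True)


if __name__ == "__main__":
    # Hypothetical layout: one folder per app under ~/apps, remote named "gdrive".
    print(" ".join(build_backup_command("/home/pi/apps", "gdrive", "backup/rpi4")))
```

Because only `--include` rules are used, rclone skips everything else in the app folders. Data stays local and only the small YAML files go to Google Drive.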

7. Current Issues

- Despite having many applications, their practical value feels underwhelming.
  - Reason: many were set up out of curiosity ("just to try"), so few of them have been developed in any depth.

- Application-related needs:
  - Prometheus:
    - Need to set up notifications for when exporters go down.
  - Grafana:
    - Currently using the standard dashboard for each exporter; they are not very visually appealing.
    - Checking dashboards in the web UI is inconvenient because problems are not noticed right away → I would prefer Slack integration for alerts.
    - Some exporter data does not match the expected values → needs testing and validation.
  - Airflow:
    - I am using the default Docker Compose setup, but DAGs sometimes do not appear in the web UI, possibly due to high resource consumption.
    - Stale database entries from a previous (unspecified) version seem to make the reference DAGs persist instead of being deleted automatically.
      - Manually deleting the reference DAGs seems to work, but they reappear later.
      - I eventually deleted all reference DAGs by running `airflow db init` with the reference-DAG flag set to false.
    - Airflow logs accumulate excessively → I plan to write a DAG that cleans them up.
  - Docker:
    - Other Docker setups are fine, but Docker on the Mac consumes an excessive amount of space.
      - On the Mac, system storage takes up 150 GB, and it is unclear where this usage comes from.
  - rclone:
    - I currently refresh the Google Drive API token manually once a week.
      - This is likely because the OAuth app is still a test app. Google issues refresh tokens that expire after 7 days for apps whose publishing status is "Testing". Moving to production requires passing strict personal-information verification, which I have avoided so far.
      - Refreshing the token weekly is not a major burden, but it is quite annoying.
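The planned log-cleanup job could start from a plain Python function like the one below, called from a `PythonOperator` or `@task` in a daily DAG. The DAG wrapper itself is omitted. This sketch makes three assumptions:
- The default Docker Compose setup mounts the log folder at `/opt/airflow/logs` inside the containers.
- Task log files end in `.log`.
- The 30-day retention is an arbitrary example value.

```python
"""Sketch: delete old Airflow log files and prune empty log folders."""
import os
import time
from pathlib import Path


def clean_old_logs(log_dir, max_age_days=30, dry_run=False, now=None):
    """Remove *.log files older than max_age_days under log_dir.

    Returns the sorted list of files that were deleted (or, with dry_run=True,
    that would be deleted). Empty folders left behind are removed as well.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 24 * 60 * 60
    root = Path(log_dir)

    # Collect first, then delete, so the tree is not modified during the search.
    old_files = sorted(
        p for p in root.rglob("*.log") if p.is_file() and p.stat().st_mtime < cutoff
    )
    if dry_run:
        return old_files

    for path in old_files:
        path.unlink()

    # Bottom-up walk: child folders are checked (and removed) before their parents.
    for dirpath, _dirnames, _filenames in os.walk(root, topdown=False):
        folder = Path(dirpath)
        if folder != root and not any(folder.iterdir()):
            folder.rmdir()
    return old_files


if __name__ == "__main__":
    # Dry run against the log path used inside the official Airflow containers.
    logs = Path("/opt/airflow/logs")
    if logs.is_dir():
        for f in clean_old_logs(logs, max_age_days=30, dry_run=True):
            print(f)
```

Running with `dry_run=True` first shows what would be removed before anything is deleted.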

- Hardware-related issues
  - The Raspberry Pi 3's SD card failed, and the Pi would no longer boot.
    - Since most of my servers are not in perfect condition, this could happen again at any time.
  - Based on this experience, I use `docker-compose.yml` files to install and run most applications.
    - Each application is then defined by its YAML file. Simply backing up that file (to Google Drive) makes reinstalling the application later much easier.
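If a board fails again, the backed-up compose files make recovery quick. This is a sketch under two assumptions. First, the backups have been downloaded into one folder per application, for example with `rclone copy` in the reverse direction. Second, the Docker Compose v2 plugin (`docker compose`) is installed. The folder name `restored-apps` is hypothetical. The script starts every application it finds, or just prints the commands in dry-run mode.

```python
"""Sketch: start every app from restored docker-compose files."""
import subprocess
from pathlib import Path

COMPOSE_NAMES = ("docker-compose.yml", "docker-compose.yaml")


def find_compose_files(root):
    """All compose files below root, in a stable (sorted) order."""
    root = Path(root)
    return sorted(p for name in COMPOSE_NAMES for p in root.rglob(name))


def up_commands(root):
    """(command, working_dir) pairs: one `docker compose up -d` per file.

    Running from the file's own folder mirrors starting the app by hand there,
    so relative bind-mount paths and a neighboring .env file resolve the same way.
    """
    return [
        (["docker", "compose", "-f", f.name, "up", "-d"], f.parent)
        for f in find_compose_files(root)
    ]


def restore(root, dry_run=True):
    """Print (dry run) or execute the start-up command for each app."""
    for cmd, cwd in up_commands(root):
        if dry_run:
            print(f"(cd {cwd} && {' '.join(cmd)})")
        else:
            subprocess.run(cmd, cwd=cwd, check=True)


if __name__ == "__main__":
    restored = Path("restored-apps")  # hypothetical folder with the downloaded backups
    if restored.is_dir():
        restore(restored)
```

The script defaults to a dry run because some apps may depend on each other, such as an exporter that needs its database. The printed order can then be reviewed or adjusted before anything is started.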

8. Conclusion

- This article documents the technical environment I currently run experimentally at home.
- I plan to update it as I resolve current issues or try out new ideas.
- I am considering sharing the settings for each application and the `docker-compose` files, with some information removed.